AI Risk Assessment: Everything You Need to Know

AI applications increasingly operate in production environments where incorrect outputs carry financial, legal, and safety consequences. Risk assessment of AI applications focuses on how data pipelines, model behavior, deployment architecture, and user interaction introduce failure modes over time.

Unlike traditional applications, AI systems keep changing after deployment as data distributions shift and models are retrained. As a result, performance can degrade, bias can increase, and security exposure can grow over time. Conventional software risk approaches fail to capture probabilistic behavior and opaque decision logic.

AI risk assessment establishes controls to detect, measure, and mitigate these risks across the application lifecycle. This content is intended for AI leaders, architects, engineers, and risk professionals responsible for deploying and operating AI systems in high-impact environments.

What Is AI Risk Assessment?

AI risk assessment is the systematic process of identifying, evaluating, and prioritizing risks that arise from AI systems across their lifecycle (design, deployment, and use).

Unlike static risk reviews, it accounts for probabilistic outputs, non-deterministic behavior, and post-deployment drift. It helps examine how training data quality, model behavior, system integration, and deployment context create failure modes under real operating conditions.

AI Risk Assessment Through a TRiSM Lens

Trust: Can the system be relied on?

Includes data provenance, model performance stability, explainability, versioning, and monitoring of behavior drift.

Risk: What is the impact if it fails?

Evaluates likelihood, blast radius, business tolerance, regulatory exposure, and downstream system impact.

Security: Can the system be abused?

Covers prompt injection, training data leakage, model extraction, unsafe tool execution, agent permission boundaries, and access control failures.

Modern AI governance requires technical controls, not policy statements. The TRiSM lens frames AI risk as enforceable system properties.

Difference Between AI Risk and Traditional Software Risk

| Traditional Software Risk | AI Risk (Fundamentally Different) |
| --- | --- |

Why Are AI Risk Assessments Important?

AI risk assessments are critical because AI applications introduce non-deterministic, data-driven failure modes that evade traditional software validation. Offline metrics collapse under distribution shift, feedback loops, proxy learning, and out-of-distribution inputs, causing undetected performance degradation in production.

AI systems operate as tightly coupled pipelines where data ingestion, feature engineering, inference, and post-processing amplify upstream errors across thousands of decisions. Without a risk assessment, the severity, likelihood, and blast radius of failures remain unknown.

Risk assessment forces explicit evaluation of robustness, uncertainty calibration, explainability constraints, and security exposure. It also defines enforceable operational controls such as drift detection, retraining triggers, rollback thresholds, and auditability. Without this discipline, AI systems remain statistically fragile, operationally opaque, and legally indefensible.

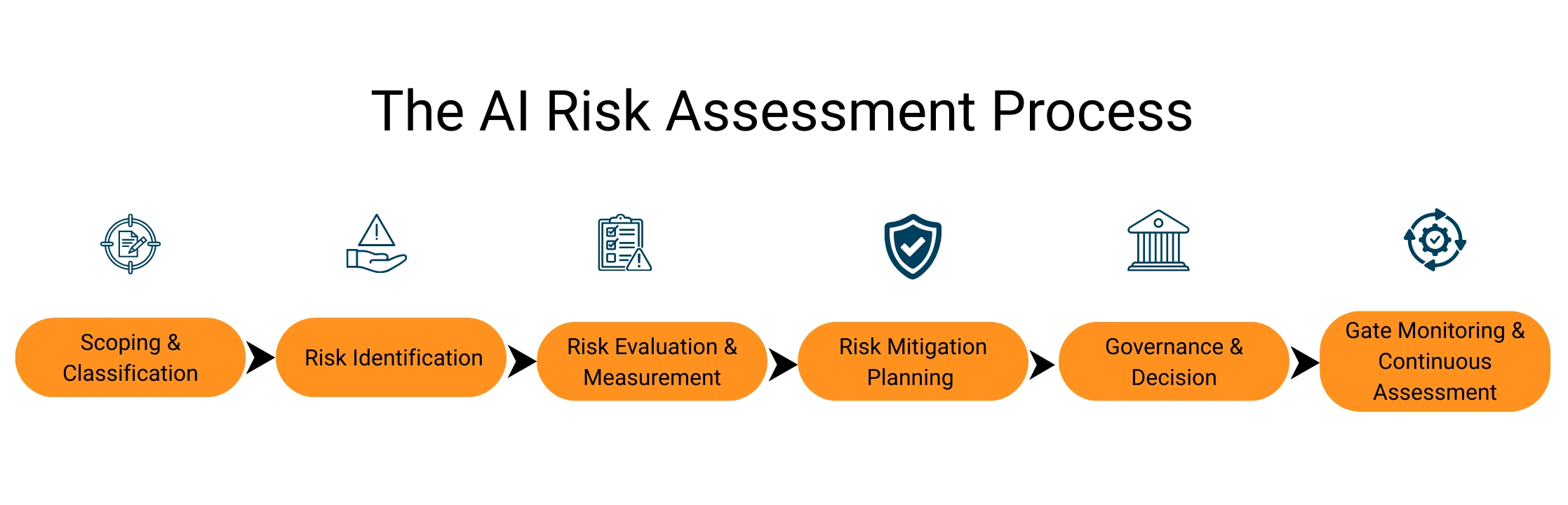

The AI Risk Assessment Process

AI risk assessment is a continuous control process, not a one-time review.

Mature teams enforce AI risk through a control plane that spans pre-production, runtime, and post-production stages of the system lifecycle.

AI Risk Control Layers

- Pre-production gates: Model evaluations, security testing, and approval thresholds enforced before deployment.

- Runtime enforcement: AI gateway and runtime defenses, guardrails, rate limits, and policy checks applied during live operation.

- Post-production oversight: Continuous monitoring, drift detection, incident response, and risk reporting after release.

The steps below outline how mature teams identify, measure, and control risk across the full AI system lifecycle.

- Scoping and Classification

Risk control starts with making system intent explicit. Teams must define what the model does, how autonomous it is, and what happens when it fails. In practice, this means encoding risk level, decision type, and human override expectations directly into model metadata and deployment configuration.
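As a minimal sketch of what encoding scope into model metadata might look like, the snippet below defines a hypothetical `ModelRiskProfile` record. The class names, fields, and the validation rule are illustrative assumptions, not a prescribed schema.

```python
from dataclasses import dataclass
from enum import Enum


class RiskLevel(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


class DecisionType(Enum):
    ADVISORY = "advisory"    # output informs a human decision
    AUTOMATED = "automated"  # output is acted on without review


@dataclass(frozen=True)
class ModelRiskProfile:
    """Hypothetical metadata record stored alongside a model version."""
    model_name: str
    purpose: str
    risk_level: RiskLevel
    decision_type: DecisionType
    human_override_required: bool
    failure_impact: str

    def validate(self) -> list[str]:
        """Return a list of scoping problems; an empty list means the profile is usable."""
        problems = []
        if (
            self.risk_level is RiskLevel.HIGH
            and self.decision_type is DecisionType.AUTOMATED
            and not self.human_override_required
        ):
            # Assumed policy: high-risk automated decisions need an override path.
            problems.append("high-risk automated decisions must allow human override")
        if not self.failure_impact.strip():
            problems.append("failure impact must be documented")
        return problems


profile = ModelRiskProfile(
    model_name="credit-scorer",  # hypothetical model
    purpose="rank loan applications",
    risk_level=RiskLevel.HIGH,
    decision_type=DecisionType.AUTOMATED,
    human_override_required=True,
    failure_impact="wrongful denial of credit",
)
print(profile.validate())
```

A deployment pipeline could refuse to register a model whose profile returns any problems, which keeps scoping decisions next to the artifact they describe.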

- Risk Identification

Risk identification defines how the AI system can fail in production. This includes model risks such as data leakage, bias, confidence miscalibration, adversarial inputs, and fragile behavior in rare states. It also includes system risks introduced by agents and integrations, including overprivileged agent or tool access, direct and indirect prompt injection, unsafe MCP or tool exposure, and data oversharing through embedded or external AI usage.

If agent and tool risks are missing, the assessment reflects legacy ML systems, not modern AI platforms.
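One of the system risks named above, overprivileged agent or tool access, lends itself to a simple automated check. The sketch below compares the scopes each agent has been granted against the scopes it is declared to need; the function name, agent names, and scope strings are hypothetical.

```python
def find_excess_permissions(
    granted: dict[str, set[str]],
    required: dict[str, set[str]],
) -> dict[str, set[str]]:
    """Return, per agent, the granted scopes that are not declared as required."""
    excess = {}
    for agent, scopes in granted.items():
        # An agent with no declared requirements is treated as needing nothing.
        extra = scopes - required.get(agent, set())
        if extra:
            excess[agent] = extra
    return excess


# Hypothetical inventory of agent permissions.
granted = {
    "support-agent": {"tickets:read", "tickets:write", "billing:refund"},
    "search-agent": {"docs:read"},
}
required = {
    "support-agent": {"tickets:read", "tickets:write"},
    "search-agent": {"docs:read"},
}
print(find_excess_permissions(granted, required))
```

Any non-empty result is a candidate entry for the risk register, since it marks access that widens the blast radius of a successful prompt injection without serving the agent's stated purpose.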

- Risk Evaluation and Measurement

Once risks are identified, they must be continuously measurable and enforceable. Accuracy alone is insufficient.

Teams run continuous evaluations across quality, security, and policy compliance. Metrics must reflect real impact, including calibration error, cohort-level performance deltas, drift magnitude, latency sensitivity, downstream error propagation, and misuse signals.

Evaluation results are enforced through promotion gates in CI/CD. Models that fail evaluation thresholds do not advance to deployment, regardless of delivery pressure. This is the operational bridge between AI governance and engineering execution.
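To make the idea of a promotion gate concrete, here is a minimal sketch that computes expected calibration error (ECE) with equal-width confidence bins and then checks a set of metrics against maximum allowed values. The metric names and limits are illustrative assumptions; real thresholds depend on the application.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average gap between mean confidence and accuracy per bin."""
    n = len(confidences)
    if n == 0:
        raise ValueError("no predictions to evaluate")
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes in the last bin
        bins[idx].append((conf, ok))
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_conf = sum(c for c, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        ece += (len(bucket) / n) * abs(avg_conf - accuracy)
    return ece


def promotion_gate(metrics, max_allowed):
    """Return the names of metrics that are missing or exceed their limit."""
    failures = []
    for name, limit in max_allowed.items():
        value = metrics.get(name)
        if value is None or value > limit:
            failures.append(name)
    return failures


# Hypothetical evaluation results and limits.
metrics = {
    "ece": expected_calibration_error([0.9, 0.9, 0.9, 0.9], [True, True, True, False]),
    "cohort_accuracy_gap": 0.02,
}
limits = {"ece": 0.05, "cohort_accuracy_gap": 0.05}
print(promotion_gate(metrics, limits))
```

In a CI/CD job, a non-empty failure list would typically translate into a non-zero exit code so the pipeline stops before deployment. Treating a missing metric as a failure is a deliberate choice here: a gate that passes when an evaluation silently did not run is not a gate.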

- Risk Mitigation Planning

Mitigation is where risk assessment becomes real engineering. Controls must be executable. Confidence thresholds, abstention logic, fallback models, feature constraints, human-in-the-loop gates, and retraining triggers are all examples of enforceable mitigations.
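The following sketch shows one way confidence thresholds, a fallback model, and abstention to human review could be combined into a single decision path. The `decide` function, the 0.8 threshold, and the stand-in predictors are assumptions for illustration only.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# A predictor takes a feature dict and returns (label, confidence).
Predictor = Callable[[dict], tuple[str, float]]


@dataclass
class Decision:
    label: Optional[str]  # None means the system abstained
    source: str           # "primary", "fallback", or "human_review"


def decide(features: dict, primary: Predictor, fallback: Predictor,
           threshold: float = 0.8) -> Decision:
    """Use the primary model if confident, else the fallback, else abstain."""
    label, confidence = primary(features)
    if confidence >= threshold:
        return Decision(label, "primary")
    label, confidence = fallback(features)
    if confidence >= threshold:
        return Decision(label, "fallback")
    # Neither model is confident enough: route to a human reviewer.
    return Decision(None, "human_review")


# Stand-in predictors with fixed outputs, for demonstration only.
def uncertain_model(features: dict) -> tuple[str, float]:
    return ("approve", 0.55)


def simple_rules(features: dict) -> tuple[str, float]:
    return ("deny", 0.60)


print(decide({"amount": 1200}, uncertain_model, simple_rules))
```

Because the abstention path is part of the code rather than a policy note, it can be tested, logged, and audited like any other branch.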

- Governance and Decision Gate

Deployment must be conditional on residual risk, not engineering momentum. Release decisions should be gated by explicit risk criteria that determine whether a system can ship, ship with constraints, or be blocked entirely. These gates must be enforced through deployment pipelines, not post-hoc review.
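A decision gate of this kind can be expressed as a small function that maps a residual risk score to one of the three outcomes. This is a sketch under stated assumptions: the score is taken to be normalized to the range 0 to 1, and the two cut-offs are placeholders that each organization would set for itself.

```python
from enum import Enum


class ReleaseDecision(Enum):
    SHIP = "ship"
    SHIP_WITH_CONSTRAINTS = "ship_with_constraints"
    BLOCK = "block"


def release_decision(residual_risk: float,
                     ship_below: float = 0.2,
                     block_at: float = 0.6) -> ReleaseDecision:
    """Map a normalized residual risk score (0 to 1) to a release outcome."""
    if not 0.0 <= residual_risk <= 1.0:
        raise ValueError("residual_risk must be between 0 and 1")
    if residual_risk >= block_at:
        return ReleaseDecision.BLOCK
    if residual_risk < ship_below:
        return ReleaseDecision.SHIP
    # In between: release only with constraints such as reduced traffic
    # or mandatory human review.
    return ReleaseDecision.SHIP_WITH_CONSTRAINTS


print(release_decision(0.35).value)
```

Calling this from the deployment pipeline, rather than from a review meeting, is what makes the gate enforceable.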

- Monitoring and Continuous Assessment

Monitor what breaks in production. Monitor drift, calibration decay, anomaly rates, and incident frequency. Trigger retraining, rollback, or shutdown automatically when thresholds are crossed.
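As one possible illustration of threshold-driven monitoring, the sketch below measures drift on a single numeric feature with the population stability index (PSI) and maps the result to an action. PSI is a swapped-in example metric, not one the process above prescribes, and the 0.1 and 0.25 cut-offs are common rules of thumb used here as assumptions.

```python
import math


def population_stability_index(expected, actual, n_bins=10):
    """PSI between a reference sample and a live sample of one numeric feature."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins or 1.0  # guard against a constant reference sample

    def proportions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            counts[max(0, min(idx, n_bins - 1))] += 1  # clamp out-of-range values
        eps = 1e-6  # smoothing so empty bins do not produce log(0)
        return [(c + eps) / (len(values) + eps * n_bins) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def monitoring_action(psi, retrain_at=0.1, rollback_at=0.25):
    """Translate a drift score into an automated response."""
    if psi >= rollback_at:
        return "rollback"
    if psi >= retrain_at:
        return "retrain"
    return "none"


reference = [i / 100 for i in range(100)]  # synthetic training-time feature values
live = [x + 0.5 for x in reference]        # synthetic shifted production values
psi = population_stability_index(reference, live)
print(monitoring_action(psi))
```

The same pattern extends to calibration decay, anomaly rates, or incident counts: compute the signal on a schedule, compare it to a threshold, and let the comparison, not a person noticing a dashboard, trigger the response.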

Operationalizing AI Risk Assessment: Practical Strategies and Best Practices

Merely understanding the AI risk assessment process is not enough. The real challenge lies in applying the right strategies and best practices that allow teams to assess risk consistently across real AI applications.

This is the focus of our upcoming webinar, Strategies and Best Practices for Risk Assessment in AI Apps. The session moves beyond frameworks to show how teams operationalize AI risk assessment through enforceable controls, runtime governance, evaluation gates, and protection against agent- and tool-level risks.

The panel includes Neema Wasira-Johnson, founder and CEO of Asili Advisory Group; Sai Rakshith, cybersecurity solutions consultant at DivIHN Integration Inc; and Yawar Kamran, consultant at DivIHN Integration Inc.

Together, we discuss how teams translate risk assessment into enforceable controls, decision gates, and ongoing monitoring for AI systems in production.

Conclusion: AI Risk Is a Control Problem

AI risk is not a compliance issue, and it is not solved by documentation or policy statements. It is a control problem. Once deployed, AI systems operate autonomously, adapt to changing data, and influence decisions at a scale no human process can supervise directly. The primary risk is not that models are imperfect, but that organizations lose the ability to observe, intervene, and justify system behavior under change.

Effective AI risk assessment establishes control boundaries. It defines where autonomy is allowed, which signals indicate loss of control, and when intervention is mandatory. These controls must be enforced through engineering systems, not governance checklists.

Organizations that treat AI risk as compliance accumulate invisible operational and legal exposure. Those that treat it as a control discipline retain the ability to deploy AI safely, scale responsibly, and remain accountable when systems inevitably fail.
